#12 Why Smart Money is Chasing HBM Right Now

Deep dive into tech terms that you hear about more and more, but perhaps couldn't explain to your parents. This week: HIGH BANDWIDTH MEMORY (HBM)

“Nvidia’s trillion-dollar empire depends on a few millimeters of stacked silicon”

Why you need to understand what “HBM” means

Every investor in AI should understand one acronym right now:

HBM: High-Bandwidth Memory

It’s the component that actually decides how many GPUs Nvidia can ship, how fast models can train, and which countries can control the global AI infrastructure

(Hope that intro is interesting enough to keep you reading on)

HBM is a high-performance memory component that goes into GPUs, like Blackwell from NVIDIA

But it’s not NVIDIA who builds HBM. It’s three suppliers: SK hynix, Samsung, and Micron

They are the world’s three suppliers of the HBM that goes into every new generation of GPUs

And one company, TSMC, assembles nearly every GPU that HBM goes into.

Control that ecosystem, and you control the speed of AI progress itself

The Memory Wall

GPUs are like Formula 1 engines, but if you don’t have good fuel lines feeding data to that engine, it’s like fuelling a high-performance engine through a paper straw

GPUs can perform trillions of calculations per second, but the data for those operations needs to arrive fast enough if you want to do really heavy and fast computations

But it’s technically not just about moving raw data. It’s about holding that data in short-term memory so the LLM can access it quickly

If you remember from previous articles, LLMs are based on Attention and Context, which basically means that they generate the next word based on all the words that came before it. This requires short-term memory

And the more an LLM generates (e.g. the longer the conversation you have with ChatGPT, the more data you put into the context window, or the more complicated a task you give an AI agent), the more short-term memory you need

But how fast you can access that memory depends on your “memory bandwidth”

The size of that bandwidth very quickly becomes the physical limit for every large model, training run, inference cluster, and data center build out
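To make that limit concrete, here is a rough back-of-the-envelope sketch. It assumes the simplest possible case: one conversation at a time, where every new word requires reading all the model's weights from memory once. The model size (70 billion parameters, 2 bytes each) and the bandwidth figures are illustrative assumptions, not specs of any particular product.

```python
def max_decode_tokens_per_second(params_billion, bytes_per_param, bandwidth_tb_s):
    """Upper bound on words/second for one stream when memory is the bottleneck.

    Assumption: each generated token needs one full read of the weights,
    so speed is capped at (bytes per second) / (bytes per token).
    """
    model_bytes = params_billion * 1e9 * bytes_per_param
    bandwidth_bytes_per_s = bandwidth_tb_s * 1e12
    return bandwidth_bytes_per_s / model_bytes


# Hypothetical 70B-parameter model stored at 2 bytes per parameter
slow = max_decode_tokens_per_second(70, 2, bandwidth_tb_s=1.0)
fast = max_decode_tokens_per_second(70, 2, bandwidth_tb_s=2.0)

print(f"1 TB/s -> at most {slow:.1f} tokens/s")
print(f"2 TB/s -> at most {fast:.1f} tokens/s")
```

Notice that the number of calculations the GPU can do never shows up in this formula. In this simplified regime, doubling the bandwidth doubles the ceiling, no matter how powerful the engine is.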

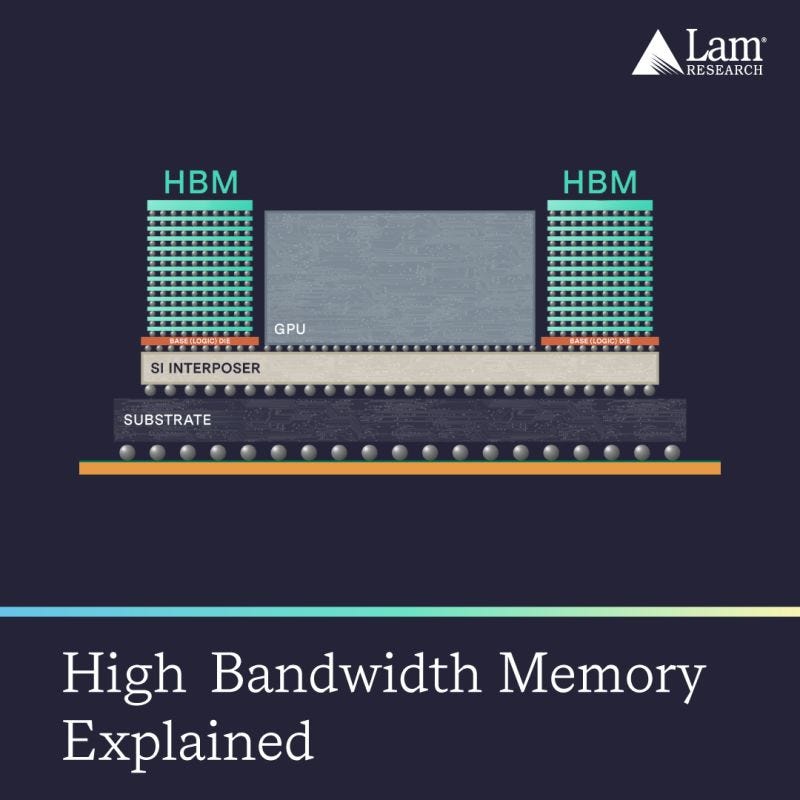

HBM’s task is to bring memory closer to the actual computing action, over a much wider connection

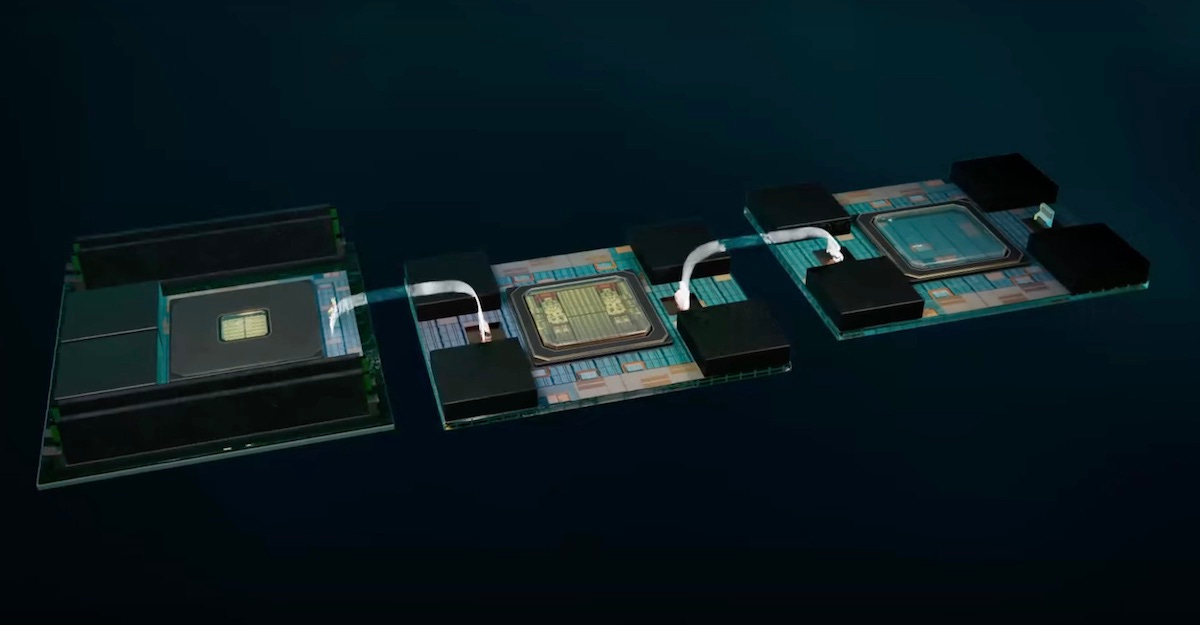

Instead of scattering lots of memory chips across a circuit board (think of what a classic circuit board looks like), HBM stacks them vertically like a skyscraper, linked by microscopic elevators and parked right beside the GPU

Thousands of parallel data lanes can then deliver terabytes per second of throughput, in order to keep the GPU engines roaring
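The "many lanes" idea boils down to simple arithmetic: bandwidth is the number of lanes times the speed of each lane. The sketch below uses roughly HBM3-class figures (a 1024-bit interface at 6.4 Gb/s per pin) and a narrower, faster conventional memory chip for comparison. Treat all of these numbers as illustrative assumptions rather than the spec of any specific GPU.

```python
def bandwidth_gb_per_s(bus_width_bits, gbit_per_pin_per_s):
    """Peak bandwidth in GB/s: lanes x speed per lane, converted from bits to bytes."""
    return bus_width_bits * gbit_per_pin_per_s / 8


# Assumed figures: one HBM stack is very wide but each lane is relatively slow
hbm_stack = bandwidth_gb_per_s(bus_width_bits=1024, gbit_per_pin_per_s=6.4)

# Assumed figures: a conventional graphics memory chip is narrow but fast per lane
narrow_chip = bandwidth_gb_per_s(bus_width_bits=32, gbit_per_pin_per_s=16.0)

# Eight stacks around one GPU, as described in the packaging section
eight_stacks_tb_s = 8 * hbm_stack / 1000

print(f"One wide stack:   {hbm_stack:.1f} GB/s")
print(f"One narrow chip:  {narrow_chip:.1f} GB/s")
print(f"Eight stacks:     {eight_stacks_tb_s:.2f} TB/s")
```

The takeaway is that HBM wins on width, not on per-lane speed, which is also why it has to sit right next to the GPU: you can't run thousands of lanes across a circuit board.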

Packaging: The New Battlefield

Each GPU die has limited “beachfront property” where you can connect stuff to it. Some industry analysts will use the term “the shoreline problem”

There is basically only so much physical space on the edge of the GPU where you can connect other things to it

Enter TSMC’s CoWoS-L packaging (“Chip-on-Wafer-on-Substrate”), which literally extends the physical silicon edge/interface so Nvidia can anchor up to eight HBM stacks around a single GPU

That packaging capacity, not transistor density (ref. Moore’s Law, which is quickly becoming less and less relevant in terms of AI performance), now caps output of modern GPUs

So, when TSMC’s CoWoS capacity (e.g. in Taiwan) gets bottlenecked, GPU shipments get constrained

The Real Players Behind Nvidia

Here’s a quick overview of the ecosystem underpinning a major value pool of AI’s supply chain today:

SK hynix: Supplies most of the HBM3 and HBM3E memory used in Nvidia’s H100, H200, and next-gen Blackwell (B100/B200) chips

Nvidia has effectively pre-purchased years of Hynix capacity, which means they have locked up some of the best-yielding memory chips on Earth

Samsung: The “backup supplier”, racing to qualify its HBM4 as a second source by late 2025

Micron: Developing U.S.-based HBM4 lines so Nvidia and the U.S. government have a domestic option

TSMC, meanwhile, assembles everything using CoWoS-L. Without TSMC’s packaging, even a warehouse full of HBM chips can’t become a single GPU

Nvidia may design the world’s best compute engines, but its ability to ship them depends entirely on this four-company ecosystem

So, the real engine of AI doesn’t live inside the GPU, but in the supply chain around it

Why Bandwidth Equals Money

In a data center, bandwidth determines your actual profits

A GPU waiting on memory is like an idle factory, with a massive cost of capital burning through your balance sheet really, really fast

Remember, GPUs require huge amounts of CAPEX upfront, so you need to make sure you’re feeding those GPUs with data continuously to make any return on that investment

Doubling memory bandwidth can lift GPU utilization from 60% to 90%, which is the equivalent of adding half again as many GPUs without buying any new ones (nice!)
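If the "half again as many GPUs" claim sounds too good, the arithmetic is easy to check. The sketch below takes the 60% and 90% utilization figures from above and applies them to a hypothetical fleet of 1,000 GPUs (the fleet size is just an assumption to make the numbers tangible).

```python
def effective_gpus(num_gpus, utilization):
    """GPUs' worth of useful work you actually get out of the fleet."""
    return num_gpus * utilization


fleet = 1000  # hypothetical fleet size

before = effective_gpus(fleet, 0.60)
after = effective_gpus(fleet, 0.90)

# Relative gain in useful work, and what it would take to get it by buying hardware
gain = after / before - 1
extra_gpus_needed_otherwise = fleet * gain

print(f"Useful work before: {before:.0f} GPU-equivalents")
print(f"Useful work after:  {after:.0f} GPU-equivalents")
print(f"Gain: {gain:.0%}, like buying {extra_gpus_needed_otherwise:.0f} extra GPUs at 60% utilization")
```

Going from 60% to 90% is a 50% jump in useful work, so the same output would otherwise require 500 additional GPUs running at the old utilization.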

That’s why Nvidia’s latest modules deliver up to 13 TB/s of memory throughput.

Faster memory translates directly into higher margins and lower energy per inference.

The KV-Cache Analogy

Let’s cover one more technical term, though I’ll probably write a separate post on it later:

KV-Cache

LLMs rely on remembering context (that’s the whole idea behind Transformers, really)

Every time an LLM generates a new word as part of an answer, it must flip through a growing library of everything it has already read and generated (aka. the “key-value cache” / KV Cache) to recall the context of what it is trying to answer

Keeping that library in HBM lets the LLM keep all that context readily available on your work desk, and accessible in the blink of an eye
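How big does that library get? A common way to estimate it is to count one "key" and one "value" per attention head, per layer, per token. The model shape below (80 layers, 8 key-value heads, 128 numbers per head, 2 bytes per number) is a hypothetical configuration in the range of a large open model, chosen only for illustration.

```python
def kv_cache_bytes(tokens, layers, kv_heads, head_dim, bytes_per_value=2):
    """Approximate KV-cache size for one conversation.

    The leading factor of 2 is because both a key and a value are stored
    for every token, in every layer, for every key-value head.
    """
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


# Hypothetical model shape (an assumption, not a specific product)
shape = dict(layers=80, kv_heads=8, head_dim=128)

per_token = kv_cache_bytes(tokens=1, **shape)
long_chat = kv_cache_bytes(tokens=32_000, **shape)

print(f"Per token:     {per_token / 1024:.0f} KiB")
print(f"32,000 tokens: {long_chat / 1e9:.1f} GB")
```

Under these assumptions, a single long conversation already takes up around ten gigabytes of your work desk, and a data center serves thousands of conversations at once.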

But HBM is expensive

You can also store some of the context in cheaper memory like LPDDR or flash, but that would be like keeping all your notes in the basement: much slower but more affordable (unless your work desk is infinitely huge)

Modern AI systems move data dynamically between different types of memory storage, shuffling hot tokens into HBM and cold ones out, a kind of real-time cash-flow management for memory
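Here is a toy sketch of that shuffling, using a "least recently used" rule: whatever was touched longest ago gets moved to the basement first. This is a deliberately simplified illustration. The class name and the eviction rule are my own assumptions, and real inference systems use more sophisticated policies.

```python
from collections import OrderedDict


class TwoTierCache:
    """Toy model: a small fast tier (think HBM) backed by a large slow tier."""

    def __init__(self, fast_capacity):
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # ordered from coldest to hottest
        self.slow = {}             # stand-in for cheaper, slower memory

    def put(self, key, value):
        self.slow.pop(key, None)   # a block lives in exactly one tier
        self.fast[key] = value
        self.fast.move_to_end(key)  # mark as hottest
        self._evict_cold_blocks()

    def get(self, key):
        if key in self.fast:
            self.fast.move_to_end(key)  # touching a block keeps it hot
            return self.fast[key]
        value = self.slow.pop(key)  # slow path; raises KeyError if unknown
        self.put(key, value)        # promote back to the fast tier
        return value

    def _evict_cold_blocks(self):
        while len(self.fast) > self.fast_capacity:
            cold_key, cold_value = self.fast.popitem(last=False)
            self.slow[cold_key] = cold_value


cache = TwoTierCache(fast_capacity=2)
for block in ["a", "b", "c"]:
    cache.put(block, f"kv-{block}")

cache.get("a")  # "a" had gone cold; reading it pulls it back into the fast tier

print("fast tier:", list(cache.fast))
print("slow tier:", list(cache.slow))
```

The design choice worth noticing is that nothing is ever thrown away: cold blocks are demoted rather than deleted, so the model keeps its full context and only pays a speed penalty when it reaches for something in the basement.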

This is why we’ve seen massive improvements in some LLMs’ performance, even when you shrink the model size or constrain compute

In other words, if you manage the memory of an LLM efficiently, you can make much smaller models and get much more out of your existing compute capacity (super nice!)

To close off for today

The AI boom’s true bottleneck isn’t just algorithms or GPUs

It’s increasingly becoming “bandwidth per watt”

Basically, if you only have a certain amount of power (watts) available for your data center, you want to make sure that the bandwidth of your GPUs is maximised

So one could argue that “packaging of memory” is a new version of Moore’s Law

And the companies mastering HBM (SK hynix, Samsung, Micron, and TSMC) quietly define the limits of the world’s AI capacity (and the growth of companies like NVIDIA and AMD?)

If compute is the engine, HBM is the bloodstream

And whoever controls that bloodstream controls the future of AI


About Me

My name is Andreas. I work at the interface between frontier technology and rapidly evolving business models, where I develop frameworks, tools, and mental models to keep up and get ahead in our Technological World

Having trained as a robotics engineer but also worked on the business / finance side for over a decade, I seek to understand those few asymmetric developments that truly shape our world

If you want to read about similar topics - or just have a chat - you can also find me on LinkTree, X, LinkedIn or www.andreasproesch.com


The TSMC CoWoS packaging point is crucial. Everyone focuses on Nvidia but TSMC's packaging capacity is the real choke point. Even if SK Hynix makes perfect HBM chips, without TSMC's CoWoS-L they can't become GPUs. This "shoreline problem" means TSMC controls AI infrastructure as much as Nvidia does. Smart money should track TSMC's CoWoS capacity expansion, not just chip fab capacity.