#2 Why is Attention all you need?

A deep dive into the mechanism that powers the LLM engines behind GPT, Claude, and every modern GenAI tool.

Why Is Attention Such a Big Deal?

Most AI breakthroughs come from Scale: more data, bigger models, faster chips, and so on.

But the single most important idea in today’s Generative AI systems?

That’s not Scale. It’s Attention.

When researchers introduced the Transformer architecture in 2017 with the paper “Attention Is All You Need”, they meant exactly what the title said.

Attention changed how machines process information. Instead of reading from left to right (or right to left, or top to bottom), Attention allows an AI model to “read” a whole piece of information at once.

It’s the core mechanism that enables large language models (LLMs) to do what they do:

Understand long-range context

Generate high-quality paragraphs and code

Reason across multiple instructions and draw conclusions

Process many inputs in parallel (not what humans are best at, for sure)

If you want to understand how modern AI works, you start with Attention.

What Problem Does Attention Solve?

Before Attention, most language models were based on recurrent architectures like RNNs (“recurrent neural networks”). That’s just a fancy way of saying “reading one word at a time”.

These models passed context forward through a memory-like mechanism (a fixed-size “hidden state”), which in practice held on mostly to the last few words.

So the longer the sequence, the more diluted the context became, like a game of “Telephone”.
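To make the “Telephone” effect concrete, here is a deliberately simplified sketch: a toy one-number “memory” updated once per word with the rule h_t = a·h_(t-1) + x_t, using a hypothetical decay factor a = 0.5. Real RNNs use vectors, learned weight matrices, and nonlinearities, so treat this only as an illustration of how an early word’s contribution can shrink with every step.

```python
def first_word_influence(num_words: int, decay: float = 0.5) -> float:
    """Return how much the first word still contributes to the final memory.

    Toy linear recurrence (an illustrative assumption, not a real RNN):
        h_t = decay * h_(t-1) + x_t
    Only the first word carries a signal (x = 1); all later words have x = 0,
    so the final h is exactly what is left of the first word.
    """
    h = 0.0
    for t in range(num_words):
        x = 1.0 if t == 0 else 0.0  # only the first word carries signal
        h = decay * h + x           # one sequential update per word
    return h

for n in (1, 5, 10, 20):
    print(n, first_word_influence(n))
```

With these toy numbers the first word’s share halves at every step: 1.0 for a one-word input, 0.0625 after five words, and under 0.002 after ten. Because each step needs the memory from the previous step, the loop also can’t be spread across words in parallel, which is why these models are slow.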

As a result, these AI models struggled with:

Forgetting earlier parts of the input you had just given them

Processing sequences veeeery slowly (again, one word at a time)

Handling ambiguous or flexible grammar (hard to manage the grammar of a very long sentence when you keep forgetting what you just read)

What was missing?

A way for every word to dynamically evaluate the importance of every other word, regardless of position.

That’s where Attention made a huge difference.

What Is Attention, Technically?

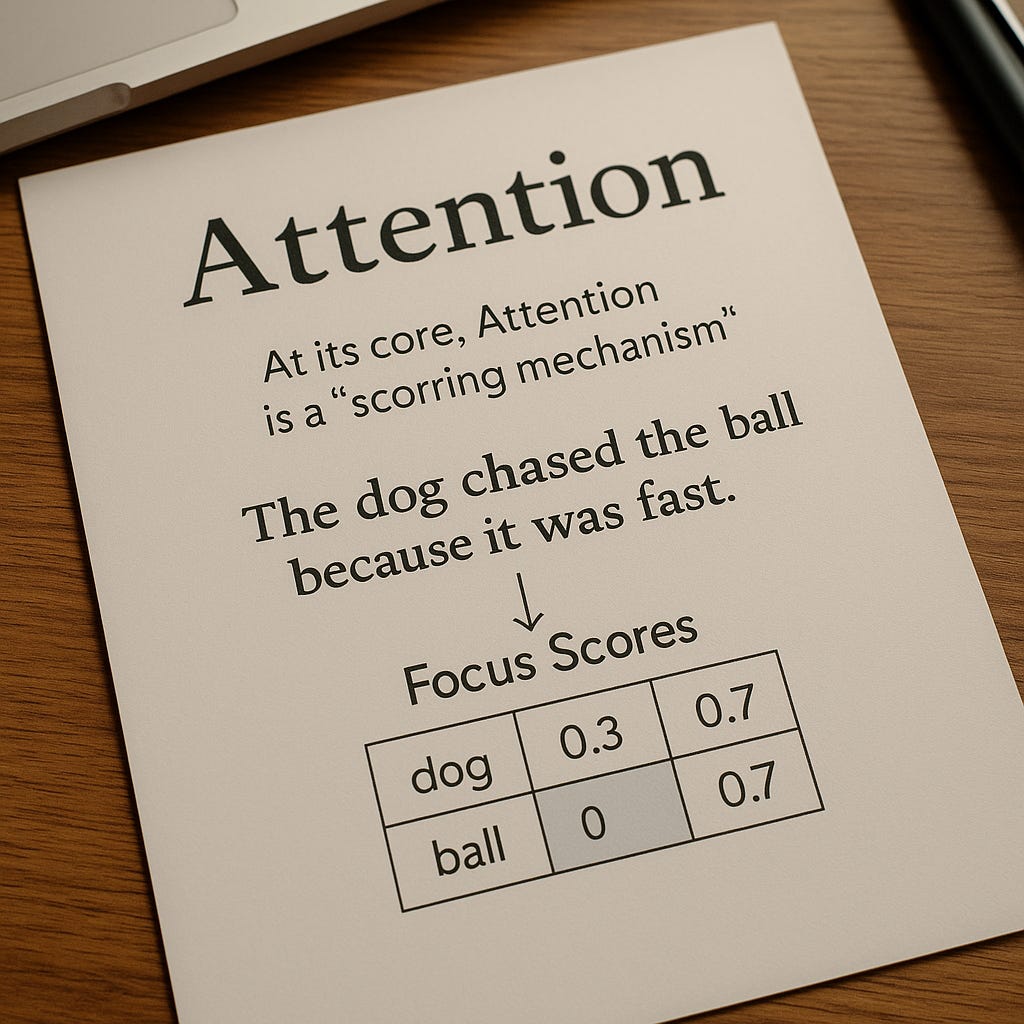

At its core, Attention is a “scoring mechanism”.

For every word (or token) in a sentence, the model calculates how much focus it should give to every word in that sentence, including itself.

Let’s take a sentence:

“The dog chased the ball because it was fast.”

To resolve what “it” refers to, the model needs to compare “dog” and “ball” and decide which one is more relevant.

It needs to pay attention to the whole sentence.

Here’s how that happens:

Each word gets projected into three “vectors” by three learned weight matrices (sorry, gotta get a bit “mathsy” here):

Query (Q): What am I looking for?

Key (K): What do I offer to other words that are looking?

Value (V): What information do I pass on when another word attends to me?

To compute Attention (as a scoring mechanism):

Each Query is compared against all Keys (via dot product) to calculate similarity scores.

Those scores are normalized into probabilities (using a “softmax”, so they are all positive and add up to 1).

The final Attention output for each word is a weighted sum of the Values, using those probabilities as the weights.
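For the mathematically curious, here are those three steps as the single formula from the 2017 paper (“scaled dot-product attention”). Q, K, and V stack every word’s Query, Key, and Value vectors as rows, d_k is the length of a Key vector, and the softmax is applied to each row separately. Dividing by √d_k keeps the scores from growing too large as the vectors get longer, which would otherwise push the softmax toward all-or-nothing weights:

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V,
\qquad
\mathrm{softmax}(z)_j = \frac{e^{z_j}}{\sum_{m} e^{z_m}}
```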

In plain English:

The model decides what to pay attention to, and how much, based on how relevant the words are to each other.
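Here is a tiny Python sketch of those steps for the “it” example, using only the standard library. The 2-D vectors are made up purely for illustration (a real model learns them and uses far more dimensions), and to keep it short we only score “it” against the two candidates “dog” and “ball”:

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single Query vector."""
    d_k = len(query)
    # 1) compare the Query with every Key (dot product, scaled by sqrt(d_k))
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    # 2) normalize the scores into probabilities
    weights = softmax(scores)
    # 3) output = probability-weighted sum of the Values
    dim_v = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim_v)]
    return weights, output

# Hypothetical, hand-picked vectors (a real model learns these)
words = ["dog", "ball"]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
query_it = [2.0, 0.5]  # made-up Query for "it" that leans toward "dog"

weights, output = attention(query_it, keys, values)
for word, p in zip(words, weights):
    print(f"{word}: {p:.2f}")
```

With these hand-picked numbers, “it” puts roughly three quarters of its attention on “dog” (a weight of about 0.74), so its output vector is mostly “dog’s” Value. Change `query_it` and the weights shift accordingly.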

What Is Self-Attention?

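In LLMs, every word in the input performs this attention calculation against the other words in the same input (in GPT-style models, only against itself and the words before it, so the model can’t peek at words it hasn’t generated yet). That’s why it’s called Self-Attention: the input attends to itself.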

It’s self-referential: the Queries, Keys, and Values are all computed from the same input sequence

It’s position-aware (thanks to positional encoding), meaning the position of a word in a sentence matters (e.g. “the cat ate the mouse” vs. “the mouse ate the cat” have the same words, but very different meanings)

It’s layered: a Transformer stacks many blocks and applies attention again in each one, so every layer can build up a richer level of meaning
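Why is positional encoding needed at all? Attention on its own has no sense of word order: shuffle the words and each word gets exactly the same attention output, just in a different order. The 2017 paper fixed this by adding a sinusoidal “positional encoding” vector to each word’s embedding. Many newer LLMs use other schemes (such as rotary embeddings), so treat this sketch as one illustrative option; the 4-D word embeddings below are hypothetical.

```python
import math

def positional_encoding(position: int, d_model: int) -> list[float]:
    """Sinusoidal positional encoding as described in the original Transformer paper."""
    pe = []
    for i in range(d_model):
        # even dimensions use sin, odd ones use cos; each (sin, cos) pair shares a frequency
        angle = position / (10000 ** ((i - i % 2) / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe

def add_positions(embeddings):
    """Add a position-dependent vector to each word embedding."""
    d_model = len(embeddings[0])
    return [[e + p for e, p in zip(emb, positional_encoding(pos, d_model))]
            for pos, emb in enumerate(embeddings)]

# Hypothetical 4-D embeddings for the words "cat" and "mouse"
cat, mouse = [0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]
a = add_positions([cat, mouse])  # "cat ... mouse"
b = add_positions([mouse, cat])  # "mouse ... cat"
print(a[0] == b[1])  # False: "cat" at position 0 differs from "cat" at position 1
```

The same word now enters the attention layers with a different vector depending on where it sits, so “the cat ate the mouse” and “the mouse ate the cat” no longer look identical to the model.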

The result: each word ends up with a contextually informed representation, which evolves across the layers of the LLM’s Transformer stack.

And because all words attend to each other in parallel, Transformers are much faster to train and scale far better than RNNs, which have to process words one after another.
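To see the “all at once” part, here is a sketch of self-attention over a whole toy input: the Queries, Keys, and Values are all computed from the same input `x`, and every pairwise score comes out of one matrix product (which a GPU can compute in parallel) rather than a word-by-word loop. The embeddings and the weight matrices `w_q`, `w_k`, `w_v` are hypothetical placeholders for what a real model learns. The optional `causal` flag applies the mask used by GPT-style (decoder-only) models, where each word can only attend to itself and earlier words:

```python
import math

def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p), both given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    m = max(row)
    exps = [math.exp(s - m) for s in row]  # exp(-inf) == 0.0, so masked words get zero weight
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v, causal=False):
    """Every row of x (one word) attends to the rows of the same x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)  # all from the same input
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]                      # transpose of K
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    if causal:
        # hide "future" words: word i may only look at words 0..i
        scores = [[s if j <= i else float("-inf") for j, s in enumerate(row)]
                  for i, row in enumerate(scores)]
    weights = [softmax(row) for row in scores]
    return weights, matmul(weights, v)

# Hypothetical toy embeddings for a 3-word input and made-up weight matrices
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_q = [[1.0, 0.0], [0.0, 1.0]]
w_k = [[1.0, 0.0], [0.0, 1.0]]
w_v = [[1.0, 2.0], [3.0, 4.0]]

weights, out = self_attention(x, w_q, w_k, w_v, causal=True)
for row in weights:
    print([round(w, 2) for w in row])
```

With `causal=True`, the first word can only attend to itself, so its weight row is `[1.0, 0.0, 0.0]`; every row still adds up to 1.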

What Is Multi-Head Attention?

Instead of doing attention once, LLMs do it many times in parallel, each time with a different set of weights.

Each of these parallel attention computations is called a head.

Each head can learn to focus on a different kind of relationship, for example:

Grammatical patterns (e.g., subject–verb)

Meaning relationships (e.g., cause–effect)

Long-range dependencies (e.g., pronouns)

The outputs from these heads are then combined (concatenated and projected back to one vector per word), giving the model a richer, multi-perspective understanding of the text.

Kind of like a piece of text being read by a small army of linguists, each with their own focus and specialty, who all merge their notes at the end.
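Here is a minimal sketch of that split, attend, combine idea, assuming each head works on its own slice of the Query, Key, and Value dimensions (a common way implementations organize heads) and that the heads’ results are concatenated. Real models first apply learned Query/Key/Value projections and finish with a learned output projection; both are left out here, and the input vectors are hypothetical:

```python
import math

def softmax(row):
    m = max(row)
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def attend(q, k, v):
    """Scaled dot-product attention for lists of row vectors."""
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        w = softmax(scores)
        out.append([sum(wj * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return out

def multi_head_attention(q, k, v, num_heads):
    """Split vectors into num_heads slices, attend per head, concatenate the results."""
    d_model = len(q[0])
    assert d_model % num_heads == 0, "d_model must divide evenly into heads"
    d_head = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        cols = slice(h * d_head, (h + 1) * d_head)  # this head's slice of the dimensions
        head_outputs.append(attend([r[cols] for r in q],
                                   [r[cols] for r in k],
                                   [r[cols] for r in v]))
    # concatenate each word's per-head outputs back into one d_model-wide vector
    # (real models then apply a learned output projection, omitted here)
    return [sum((head[i] for head in head_outputs), []) for i in range(len(q))]

# Hypothetical 4-D vectors for a 3-word input; Q, K and V come from the same words
x = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.2, 0.8], [1.0, 1.0, 0.9, 0.1]]
out = multi_head_attention(x, x, x, num_heads=2)
print(len(out), len(out[0]))  # 3 words, each still a 4-D vector
```

Each word comes out with a vector of the same size it went in with, but each half of that vector was computed by a different head looking at different dimensions, a small-scale version of the linguists merging their notes.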

So, Why Does Attention Matter?

Let’s summarize what we have so far.

For model capabilities, Attention:

Enables long-context understanding

Allows parallel computation (scales much better)

Improves the quality and relevance of the generated outputs

In short, Attention is not just a component, it’s the “computational lens” through which LLMs understand the world.

Analogy: Attention as a Team of Analysts in a War Room

Imagine you're in a “war room”.

A team of analysts is reviewing a massive intelligence report filled with hundreds of pieces of information.

Each analyst is assigned one specific line in the report. But here’s the twist:

Before making any conclusions, each analyst is allowed to consult every other analyst, and they can ask each other questions like:

“Hey, how does your line relate to mine?”

“Do you have any detail that helps clarify my section?”

“What should I prioritize in my interpretation?”

Each analyst has:

A Query: What they’re trying to understand

A Key: A short summary of what their own line is about

A Value: The core information in their own line

They use the Query to match with the Keys of others, figure out whose Value is most relevant, and blend that input into their final interpretation.

And this doesn’t happen one analyst at a time. Rather, everyone does it simultaneously (in parallel).

That’s Self-Attention.

It’s a collaborative swarm, where every token (or analyst) weighs all others in real-time, and updates its meaning based on the most relevant context.

So, where is all this going?

Attention unlocked the Transformer era. But that was just the beginning.

Next-gen models are already exploring:

Long-term memory (beyond context windows)

External tools (function calling, retrieval); more on “Agents” in an upcoming post

Multi-modal integration (images, video, audio, physics etc.)

Still, Attention remains the core innovation that made these advances possible.

Understanding Attention is like understanding electricity.

It once mattered only to a few scientists in their labs; now it is everywhere.

About Me

My name is Andreas, and I work at the interface between frontier technology and rapidly evolving business models, where I develop frameworks, tools, and mental models to keep up and get ahead in our Technological World.

Having trained as a robotics engineer and then worked on the business and finance side for over a decade, I seek to understand the few asymmetric developments that truly shape our world.

If you want to read about similar topics - or just have a chat - you can also find me on X, LinkedIn or www.andreasproesch.com

![[Tech You Should Know]](https://substackcdn.com/image/fetch/$s_!ojWk!,w_80,h_80,c_fill,f_auto,q_auto:good,fl_progressive:steep,g_auto/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F86c0a09b-5df9-4610-bb7b-e72d07a36d55_1024x1024.png)