#10 What is this "Multi-Modality" thing?

A deep dive into tech terms that you hear about more and more, but perhaps couldn't explain to your parents. This week: MULTI-MODALITY

When ChatGPT first launched, it could only handle text

You’d type something in, it would type something back out, like a poem or an email

Magic! Sure, but still a conversation in a single language: WORDS

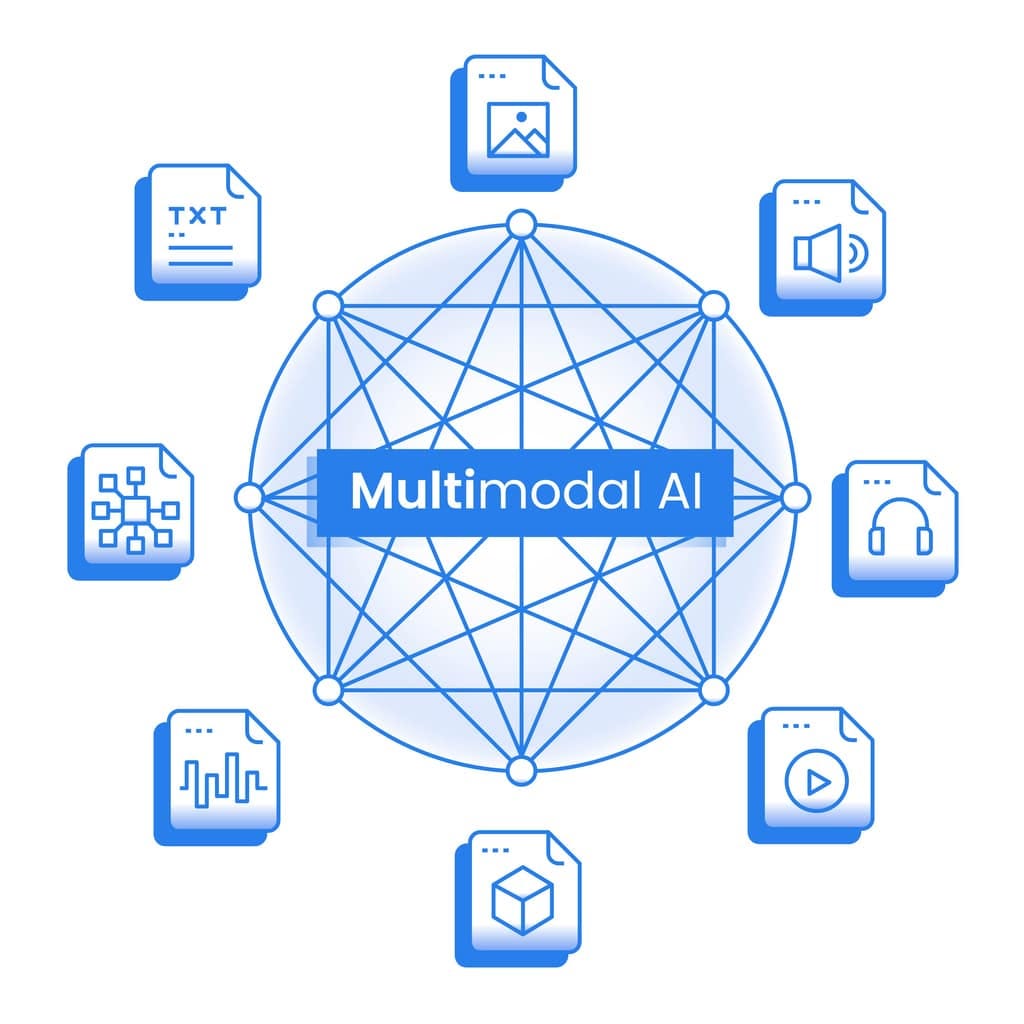

That’s now ancient history, as foundational models don’t just read and write text, but can also understand and generate images, audio, video, and even interact with the physical world

That’s actually a much bigger deal than it sounds

How does it really work?

At the core, a Foundational Model (a better term than “LLM”, for reasons we’ll see shortly) is a pattern recognition machine

It takes tokens (chunks of input), predicts the next token, and strings those predictions together into coherent output
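To make the "predict the next token, then repeat" loop concrete, here is a toy sketch in Python. It is nowhere near a real Foundational Model: it only counts which word follows which in a tiny made-up corpus and always picks the most frequent follower. The corpus and the helper names (`tokenize`, `train_bigrams`, `generate`) are invented for illustration.

```python
from collections import Counter, defaultdict

def tokenize(text):
    """Toy tokenizer: one token per word (real models use sub-word chunks)."""
    return text.lower().split()

def train_bigrams(tokens):
    """Count, for every token, which tokens follow it and how often."""
    followers = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        followers[current][nxt] += 1
    return followers

def generate(followers, start, max_new_tokens=5):
    """Repeatedly predict the most likely next token and append it."""
    output = [start]
    for _ in range(max_new_tokens):
        candidates = followers.get(output[-1])
        if not candidates:  # nothing ever followed this token in the corpus
            break
        next_token = candidates.most_common(1)[0][0]
        output.append(next_token)
    return output

# Made-up training text, purely for illustration.
corpus = "the cat sat on the mat and the cat sat on the sofa"
model = train_bigrams(tokenize(corpus))
print(" ".join(generate(model, "the", max_new_tokens=4)))
```

A real model swaps the counting table for a neural network with billions of parameters, but the outer loop (predict one token, append it, predict again) has the same shape.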

Multi-modal models turn different types of data, e.g. pixels in an image, sound waves in audio, frames in a video, torques in a motor, into sequences of numerical tokens

Once everything is converted into this common “token language,” the model can apply the same Transformer architecture that powers GPT-style LLMs

Once input is “tokenized”, the model doesn’t care if the input started as a sentence, a picture, or a sound clip
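Here is a rough Python sketch of that idea: three very different inputs all end up as one flat list of integers. Everything in it is an assumption made for illustration. Real models use learned encoders rather than the crude averaging and bucketing below, and the id offsets, patch size, and bucket counts are arbitrary.

```python
# Hypothetical id ranges so each modality gets its own slice of one shared vocabulary.
TEXT_OFFSET, IMAGE_OFFSET, AUDIO_OFFSET = 0, 1000, 2000

def text_to_tokens(text, vocab):
    """Map each word to an integer id, growing the toy vocabulary as needed."""
    return [TEXT_OFFSET + vocab.setdefault(word, len(vocab))
            for word in text.lower().split()]

def image_to_tokens(pixels, patch=2, levels=4):
    """Split a grayscale image (rows of 0-255 values) into patch x patch squares
    and bucket each square's mean brightness into one of `levels` ids."""
    tokens = []
    for top in range(0, len(pixels), patch):
        for left in range(0, len(pixels[0]), patch):
            block = [pixels[r][c]
                     for r in range(top, min(top + patch, len(pixels)))
                     for c in range(left, min(left + patch, len(pixels[0])))]
            mean = sum(block) / len(block)
            tokens.append(IMAGE_OFFSET + min(int(mean * levels / 256), levels - 1))
    return tokens

def audio_to_tokens(samples, frame=4, levels=8):
    """Chop a waveform (floats in [-1, 1]) into frames and bucket each frame's mean loudness."""
    tokens = []
    for start in range(0, len(samples), frame):
        chunk = samples[start:start + frame]
        loudness = sum(abs(s) for s in chunk) / len(chunk)
        tokens.append(AUDIO_OFFSET + min(int(loudness * levels), levels - 1))
    return tokens

vocab = {}
image = [[0, 0, 255, 255],
         [0, 0, 255, 255],
         [128, 128, 64, 64],
         [128, 128, 64, 64]]
audio = [0.0, 0.1, -0.1, 0.0, 0.9, -0.8, 0.7, -0.9]

# One sequence, three modalities: downstream code only ever sees integers.
sequence = (text_to_tokens("pick up the red cup", vocab)
            + image_to_tokens(image)
            + audio_to_tokens(audio))
print(sequence)
```

The point is the last line: by the time the data reaches the model, a sentence, a picture, and a sound clip are all just positions in the same list.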

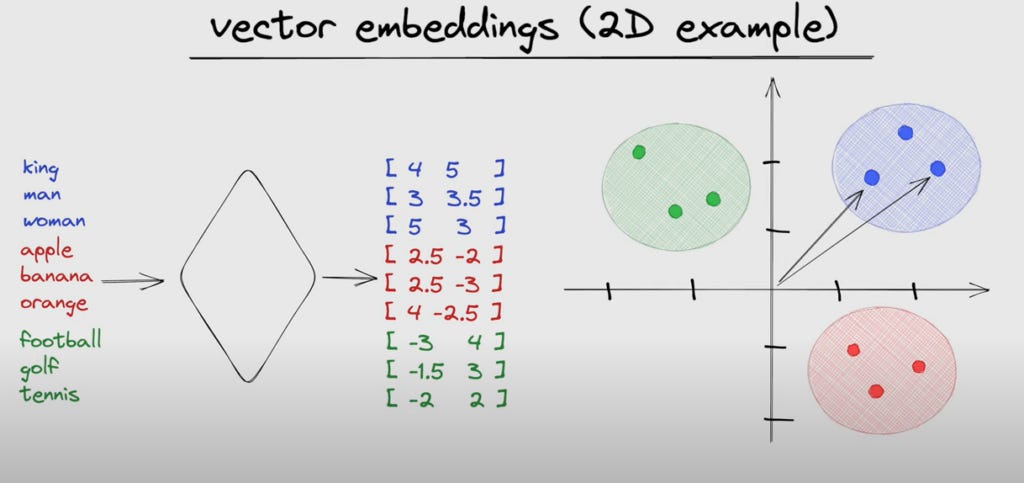

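A key part of this “tokenization” is what we call vector embeddings: each token gets mapped to a list of numbers (a vector). This is actually where your high school trigonometry becomes useful, because how similar two vectors are is often measured by the cosine of the angle between them (and you said you would never use Trigonometry as an adult - pah!)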

By converting inputs into a vector space, we generate the context that Generative AI needs to “do its magic”

I’ll do a separate piece on Vector Embeddings later, but just keep in mind that essentially any digital input can be converted into numbers that live in a huge multi-dimensional space, which the Foundational Model then uses to understand the world
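As a small taste of where the trigonometry comes in, here is a Python sketch that compares vectors by the cosine of the angle between them. The three-number vectors are made up by hand for illustration; real embeddings are learned by the model and typically have hundreds or thousands of dimensions. `most_similar` is a hypothetical helper, not part of any library.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-picked toy embeddings (assumption): a word, a photo, and a document.
embeddings = {
    "cat (word)":  [0.9, 0.1, 0.0],
    "cat (photo)": [0.8, 0.2, 0.1],
    "invoice":     [0.0, 0.1, 0.9],
}

def most_similar(query, table):
    """Return the other entry whose vector points in the most similar direction."""
    return max((name for name in table if name != query),
               key=lambda name: cosine_similarity(table[query], table[name]))

print(most_similar("cat (word)", embeddings))
```

In this toy table the word "cat" and the photo of a cat point in nearly the same direction, even though they started life as different kinds of data, while the invoice points somewhere else entirely.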

You might also want to go back to the explainer on “#2 Why is Attention All You Need?”

Let’s look at this from a higher level

A text-only model can draft contracts or summarize reports. A multi-modal model can:

Look at an engineering drawing and the compliance text next to it

Analyze a patient’s X-ray and their medical history

Review a factory floor’s video feed and the maintenance logs

Multi-modality therefore opens the door to unifying data silos

Instead of building separate ML pipelines (like we did in the past) for text classification, image recognition, audio transcription, robotics, etc., we can now build on foundational models that handle them all in one go

That doesn’t mean we won’t have specialized models that get really good at certain data types, but it massively reduces integration complexity and accelerates deployment

Beyond words: Robotics and Physical AI

I’ve written some posts about VLAs and World Models in the past, so let’s stretch this concept one step further: what if your AI doesn’t just process multiple modalities, but also outputs them into the physical world?

That’s the frontier of robotics and “physical AI”

Robots operate in a multi-modal universe by default. A humanoid robot needs to:

See through cameras

Hear through microphones

Sense balance, torque, and touch through sensors

Plan actions in 3D space

Communicate with humans in natural language

Historically, robotics systems stitched these capabilities together with brittle pipelines: one model for vision, another for control, another for planning

They barely talked to each other

A multi-modal foundational model, however, can learn directly from the joint distribution of all these signals

Again, that’s why you’re seeing companies like Tesla (with Optimus and FSD), Figure, and others talking about “world models”

In practice, that means the same model that reads instructions (“pick up the red cup”) can also see the cup, understand its position in space, plan the movement, and control the actuators to grab it
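The "control the actuators" part can also be phrased as tokens. One approach, sketched below in Python, is to chop each motor's allowed range into a fixed number of bins, so that a continuous command becomes an integer the model can predict like any other token. The bin count, the torque limits, and the function names are all assumptions for illustration, not a description of how any particular robot works.

```python
NUM_BINS = 256  # assumed number of discrete action tokens per motor

def action_to_token(value, low, high, num_bins=NUM_BINS):
    """Map a continuous command (e.g. a torque) in [low, high] to an integer token id."""
    clipped = max(low, min(high, value))  # out-of-range commands are clamped
    fraction = (clipped - low) / (high - low)
    return min(int(fraction * num_bins), num_bins - 1)

def token_to_action(token, low, high, num_bins=NUM_BINS):
    """Map a token id back to the centre of its bin, ready to send to an actuator."""
    return low + (token + 0.5) * (high - low) / num_bins

# Hypothetical torque limits (newton-metres) for three joints of an arm.
LIMITS = [(-2.0, 2.0), (-2.0, 2.0), (-0.5, 0.5)]
torques = [0.75, -1.2, 0.1]

tokens = [action_to_token(t, lo, hi) for t, (lo, hi) in zip(torques, LIMITS)]
decoded = [token_to_action(k, lo, hi) for k, (lo, hi) in zip(tokens, LIMITS)]
print(tokens)
print(decoded)
```

The round trip loses a little precision (at most half a bin width per joint), which is the price of squeezing a continuous signal into the same token vocabulary as words and pixels.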

This is multi-modality going full circle: from language to perception to action (starting to sound familiar?)

The bigger picture

The first wave of AI disrupted how we interact with text (much of it drawn from the internet)

The next wave is disrupting how we interact with physical reality

Multi-modality is the bridge: it takes AI out of the abstract world of documents and code, and plugs it directly into the sensory and physical world that humans live in.

That’s why this isn’t just a technical curiosity, but a term you need to understand as more and more of the data around us becomes “tokenized”

About Me

Working at the interface between frontier technology and rapidly evolving business models, I develop the frameworks, tools, and mental models to keep up and get ahead in our Technological World.

Having trained as a robotics engineer but also worked on the business / finance side for over a decade, I seek to understand those few asymmetric developments that truly shape our world

You can also find me on LinkTree, X, LinkedIn or www.andreasproesch.com

![[Tech You Should Know]](https://substackcdn.com/image/fetch/$s_!ojWk!,w_80,h_80,c_fill,f_auto,q_auto:good,fl_progressive:steep,g_auto/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F86c0a09b-5df9-4610-bb7b-e72d07a36d55_1024x1024.png)